❝This article is reposted from Edwardesire. Original: https://edwardesire.com/posts/crash-into-endpoints-between-cni-in-k8s/. Copyright belongs to the original author.

Problem description

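The service is deployed with three replicas, and each pod contains two containers. Container A elects a leader through a k8s Lease resource. Container B serves traffic through a Service and reports its readiness through a readinessProbe.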

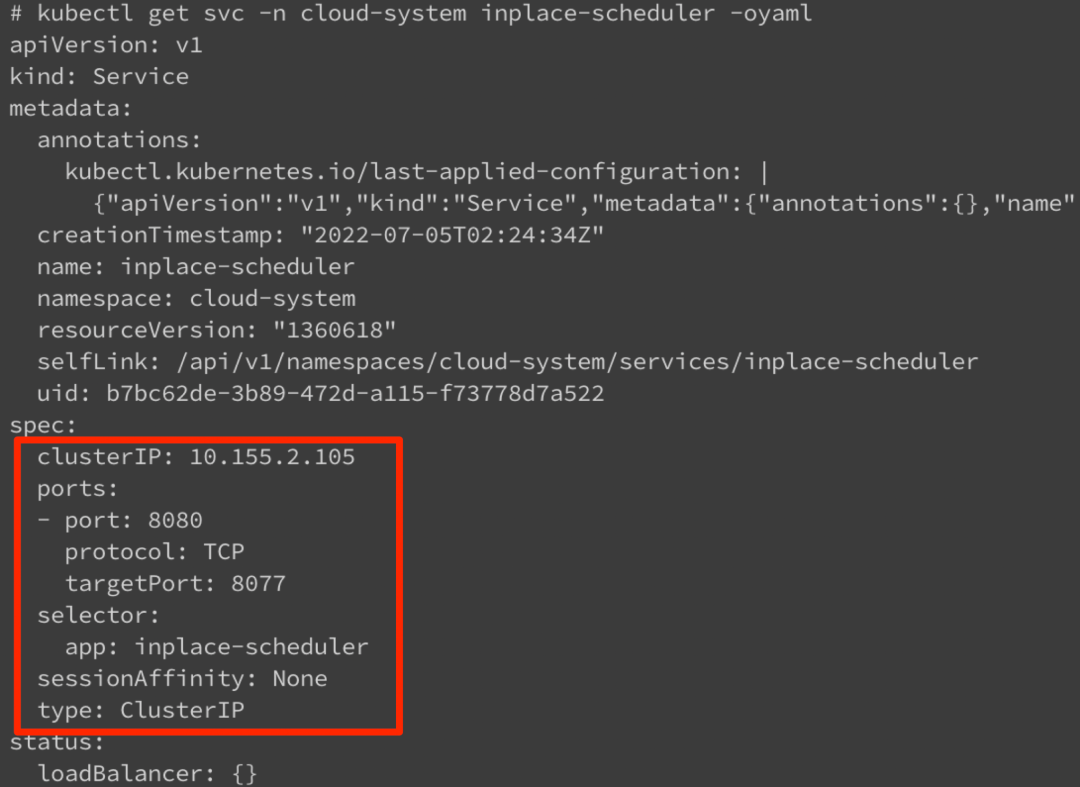

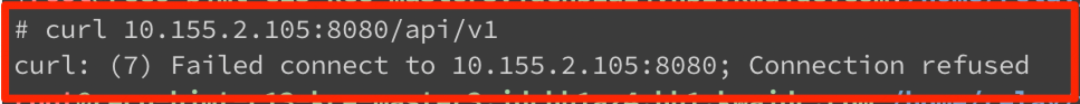

However, requests to the service through its cluster IP time out, while requests sent directly to the backend endpoints succeed.

Troubleshooting

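First, narrow down the scope. The problem only occurs on a newly built cluster (k8s v1.17.4), and the only change in that cluster is a newly deployed container network (CNI) plugin.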

The container service cannot be reached through the cluster IP.

The container service can be reached through the endpoint IPs.

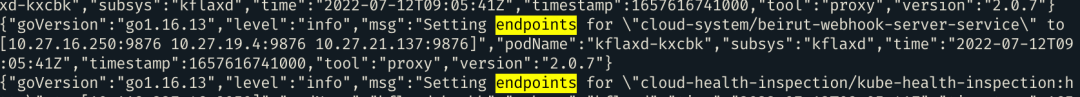

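The CNI plugin's logs show that it is working normally: it does receive the Service's endpoints events.

Next, compare the Endpoints that work with those that don't: the unreachable Endpoints carry the leader-election label. The root cause turned out to be that the CNI plugin deliberately filters out such Endpoints, to reduce the overhead caused by the frequent changes that leader election makes to them.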
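The failure mode can be illustrated with a small filter. The article does not say exactly which field the plugin checks, so this is a minimal sketch under an assumption: it treats any Endpoints object carrying the `control-plane.alpha.kubernetes.io/leader` annotation (the key client-go writes on Endpoints/ConfigMaps locks) as a lock and drops it. The `Endpoints` type and `filterServiceEndpoints` function are hypothetical, not the plugin's real code.

```go
package main

import "fmt"

// leaderAnnotation is the annotation key client-go writes on Endpoints/ConfigMaps
// lock objects. Assumption: the plugin keys on this annotation.
const leaderAnnotation = "control-plane.alpha.kubernetes.io/leader"

// Endpoints is a hypothetical, trimmed-down stand-in for a core/v1 Endpoints object.
type Endpoints struct {
	Name        string
	Annotations map[string]string
	Addresses   []string
}

// filterServiceEndpoints skips every Endpoints object that looks like a
// leader-election lock, so its addresses never become cluster IP backends.
func filterServiceEndpoints(all []Endpoints) []Endpoints {
	var kept []Endpoints
	for _, ep := range all {
		if _, isLock := ep.Annotations[leaderAnnotation]; isLock {
			continue // treated as a lock object, not as service backends
		}
		kept = append(kept, ep)
	}
	return kept
}

func main() {
	eps := []Endpoints{
		{Name: "web", Addresses: []string{"10.0.0.5"}},
		{
			Name:        "my-svc",
			Annotations: map[string]string{leaderAnnotation: `{"holderIdentity":"pod-a"}`},
			Addresses:   []string{"10.0.1.7"},
		},
	}
	for _, ep := range filterServiceEndpoints(eps) {
		fmt.Println(ep.Name) // only "web": "my-svc" is dropped despite healthy addresses
	}
}
```

This reproduces the symptom: the pod IPs behind `my-svc` answer when hit directly, but the Service's cluster IP has no backends programmed.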

Underlying logic: how leader election is implemented in K8s

The leaderelection package in client-go supports 5 types of resource locks:

```go
// Annotation key and resource lock types from client-go's tools/leaderelection/resourcelock package
const (
	LeaderElectionRecordAnnotationKey = "control-plane.alpha.kubernetes.io/leader"
	EndpointsResourceLock             = "endpoints"
	ConfigMapsResourceLock            = "configmaps"
	LeasesResourceLock                = "leases"
	EndpointsLeasesResourceLock       = "endpointsleases"
	ConfigMapsLeasesResourceLock      = "configmapsleases"
)
```
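To make the difference between the single and combined lock types concrete, here is a hypothetical helper (not part of client-go) that lists which API objects a leader rewrites on each renewal. It assumes the two combined types behave like client-go's MultiLock, with the legacy object as the primary lock and the Lease as the secondary one; only the plain `leases` type avoids touching objects that kube-proxy and CNI plugins watch.

```go
package main

import "fmt"

// Lock type strings, matching the client-go constants above.
const (
	EndpointsResourceLock        = "endpoints"
	ConfigMapsResourceLock       = "configmaps"
	LeasesResourceLock           = "leases"
	EndpointsLeasesResourceLock  = "endpointsleases"
	ConfigMapsLeasesResourceLock = "configmapsleases"
)

// objectsWritten (hypothetical) returns, in write order, the kinds a leader
// updates on each renewal for the given lock type.
func objectsWritten(lockType string) ([]string, error) {
	switch lockType {
	case EndpointsResourceLock:
		return []string{"Endpoints"}, nil
	case ConfigMapsResourceLock:
		return []string{"ConfigMap"}, nil
	case LeasesResourceLock:
		return []string{"Lease"}, nil
	case EndpointsLeasesResourceLock:
		return []string{"Endpoints", "Lease"}, nil // primary, then secondary
	case ConfigMapsLeasesResourceLock:
		return []string{"ConfigMap", "Lease"}, nil // primary, then secondary
	default:
		return nil, fmt.Errorf("unknown resource lock type %q", lockType)
	}
}

func main() {
	for _, t := range []string{EndpointsResourceLock, ConfigMapsResourceLock, LeasesResourceLock,
		EndpointsLeasesResourceLock, ConfigMapsLeasesResourceLock} {
		kinds, err := objectsWritten(t)
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("%-16s -> %v\n", t, kinds)
	}
}
```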

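These 5 lock types evolved over time. Early versions of client-go only supported Endpoints and ConfigMaps as resource locks. Because Endpoints and ConfigMaps are watched by many components in the cluster, kube-proxy for example, using them as leader-election locks significantly increases the number of events those components have to process. This problem has been discussed in the community many times: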

master leader election shouldn’t consume O(nodes) watch bandwidth[1]

Leader election causes a lot of endpoint updates[2]

To solve this problem, the community added the Leases resource lock type:

Add Lease implementation to leaderelection package[3]

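Clearly, using a dedicated resource type as the lock is a better solution than reusing Endpoints and ConfigMaps. Community discussions also recommend Lease objects instead of Endpoints and ConfigMaps for leader election.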

❝It was a mitigation, not the fix. The real fix is to switch leader election to be based on Lease object instead of Endpoints or ConfiMap. So basically #80289[4]

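For existing components that already used Endpoints/ConfigMaps as leader-election locks, such as the scheduler and kcm (kube-controller-manager), the community also proposed a migration plan designed to keep them stable: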

Migrate all uses of leader-election to use Lease API[5]

❝Currently, Kubernetes components (scheduler, kcm, …) are using leader election that is based on either Endpoints or ConfigMap objects. Given that both of these are watched by different components, this is generating a lot of unnecessary load.

We should migrate all leader-election to use Lease API (that was designed exactly for this case).

The tricky part is that in order to do that safely, I think the only reasonable way of doing that would be to:

in the first phase switch components to:

1. acquire lock on the current object (endpoints or configmap)
2. acquire lock on the new lease object
3. only the proceed with its regular functionality

[ loosing any of those two, should result in panicing and restarting the component]

in the second phase (release after) remove point 1

@kubernetes/sig-scalability-bugs

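In other words, during the first (transition) phase a component must hold both an Endpoints/ConfigMap lock and a Lease lock at the same time (a MultiLock), and losing either lock causes the component to restart. When the lock is updated, the Endpoints/ConfigMap is written first, then the Lease. When checking whether the lock has been lost, if both resources have a holder but the holders differ, an error is returned and leader election starts over.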

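In the second phase, the Endpoints/ConfigMap lock can be removed, completing the migration to the Lease lock. To support this migration plan, the community added EndpointsLeasesResourceLock and ConfigMapsLeasesResourceLock in v1.17, which acquire both locks during the transition period. When no Service exists for it, the Endpoints lock object is created by the leader.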

migrate leader election to lease API[6]

Also in v1.17, the resource lock of controller-manager and scheduler was switched from Endpoints to EndpointsLeases:

Migrate components to EndpointsLeases leader election lock[7]

In v1.20, the resource lock of controller-manager and scheduler was switched from EndpointsLeases to Leases:

Migrate scheduler, controller-manager and cloud-controller-manager to use LeaseLock[8]

Finally, in v1.24, the community removed support for Endpoints/ConfigMaps as leader-election resource locks entirely.

Remove support for Endpoints and ConfigMaps lock from leader election[9]

Summary

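In short, the overall trend in the community is to replace Endpoints/ConfigMaps with Leases as the resource lock. Meanwhile, for older Kubernetes versions that still implement leader election with Endpoints/ConfigMaps, many components choose to ignore these leader-election Endpoints/ConfigMaps, shielding themselves from the event-processing overhead of frequent Endpoints updates. For example: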

k8s: stop watching for kubernetes management endpoints[10] (cilium)

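Therefore, components that need leader election can avoid this problem as follows:

❝Use a Lease object as the leader-election resource lock, which is also in line with the direction the community is taking. For a component like this one (the native scheduler), configuring the resourceLock type is enough.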

References

[1]master leader election shouldn’t consume O(nodes) watch bandwidth: https://github.com/kubernetes/kubernetes/issues/34627

[2]Leader election causes a lot of endpoint updates: https://github.com/kubernetes/kubernetes/issues/23812

[3]Add Lease implementation to leaderelection package: https://github.com/kubernetes/kubernetes/pull/70778

[4]#80289: https://github.com/kubernetes/kubernetes/issues/80289

[5]Migrate all uses of leader-election to use Lease API: https://github.com/kubernetes/kubernetes/issues/80289

[6]migrate leader election to lease API: https://github.com/kubernetes/kubernetes/pull/81030

[7]Migrate components to EndpointsLeases leader election lock: https://github.com/kubernetes/kubernetes/pull/84084

[8]Migrate scheduler, controller-manager and cloud-controller-manager to use LeaseLock: https://github.com/kubernetes/kubernetes/pull/94603

[9]Remove support for Endpoints and ConfigMaps lock from leader election: https://github.com/kubernetes/kubernetes/pull/106852

[10]k8s: stop watching for kubernetes management endpoints: https://github.com/cilium/cilium/pull/5448