Erda open-source repository: https://github.com/erda-project/erda

Erda Cloud official website: Erda Cloud

Introduction

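In Kubernetes, a Service load-balances traffic across a group of Pods. By default, requests to a Service are spread evenly across all of its backend Pods. In real deployments, however, nodes may sit in different locations, as in multi-AZ or edge deployments, and we would rather reach the Pods closest to us. This matters especially in the cloud, where cross-AZ traffic is billed, so keeping traffic inside a single AZ is a reasonable requirement. Kubernetes 1.17 introduced the Service Topology feature (alpha) to provide topology awareness at the Service level.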

How it works

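Under the hood, kube-proxy implements a Service. It watches the endpoint resources that belong to the Service and programs matching load-balancing rules on each node through IPVS or iptables. For a node to route requests according to its own topology, the endpoints it watches must carry topology information. kube-proxy can then compare that information with its own Node's labels and program node-specific load-balancing rules. For this purpose, Kubernetes 1.17 uses the EndpointSlice resource to describe the topology behind a Service. Here is an example.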

Each endpoint in this object is tagged with its topology information. The rest is left to kube-proxy, which filters out the endpoints relevant to its own node.

```yaml
addressType: IPv4
apiVersion: discovery.k8s.io/v1beta1
endpoints:
- addresses:
  - 10.244.2.2
  conditions:
    ready: true
  targetRef:
    kind: Pod
    name: ud-test-beijing-btwbn-78db8b7786-58wmx
    namespace: default
    resourceVersion: "3112"
    uid: 29b6d9c1-4e31-4290-9136-604038ec2b36
  topology:
    kubernetes.io/hostname: kind-worker2
    topology.kubernetes.io/zone: beijing
- addresses:
  - 10.244.1.2
  conditions:
    ready: true
  targetRef:
    kind: Pod
    name: ud-test-hangzhou-btwbn-5c7b5f545d-mggbd
    namespace: default
    resourceVersion: "3047"
    uid: 35391bde-a948-4625-a692-891a0cd3a353
  topology:
    kubernetes.io/hostname: kind-worker
    topology.kubernetes.io/zone: hangzhou
kind: EndpointSlice
```

Constraints

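- Kubernetes 1.17 or later

- kube-proxy running in IPVS or iptables mode

- The Kubernetes components started with `--feature-gates=ServiceTopology=true,EndpointSlice=true`

- Topology keys are currently limited to the following labels, plus the special `*` key. This example uses `topology.kubernetes.io/zone`.

  `kubernetes.io/hostname`, `topology.kubernetes.io/zone`, `topology.kubernetes.io/region`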

Usage example

We create two Deployments and a Service that sets `topologyKeys`. Then we check whether the resulting IPVS rules follow the topology we specified.

Create the Deployments

beijing_deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: ud-test
    apps.openyurt.io/pool-name: beijing
  name: ud-test-beijing-btwbn
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      app: ud-test
      apps.openyurt.io/pool-name: beijing
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: ud-test
        apps.openyurt.io/pool-name: beijing
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: apps.openyurt.io/nodepool
                operator: In
                values:
                - beijing
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/dice-third-party/nginx:1.14.0
        imagePullPolicy: Always
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
```

hangzhou_deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: ud-test
    apps.openyurt.io/pool-name: hangzhou
  name: ud-test-hangzhou-btwbn
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      app: ud-test
      apps.openyurt.io/pool-name: hangzhou
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: ud-test
        apps.openyurt.io/pool-name: hangzhou
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: apps.openyurt.io/nodepool
                operator: In
                values:
                - hangzhou
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/dice-third-party/nginx:1.14.0
        imagePullPolicy: Always
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
```

Create the Service

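The Service adds a `topologyKeys` field, which kube-proxy uses for topology awareness. kube-proxy looks up its own node's value for that label and filters the endpoints in the EndpointSlice against it. It then programs its load-balancing rules from the endpoints that match. The corresponding nodes therefore also need the `topology.kubernetes.io/zone` label.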

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ud-test
  labels:
    app: ud-test
spec:
  ports:
  - name: ud-test
    port: 80
    targetPort: 80
  selector:
    app: ud-test
  topologyKeys:
  - "topology.kubernetes.io/zone"
  # - "*"
```

Observe the result

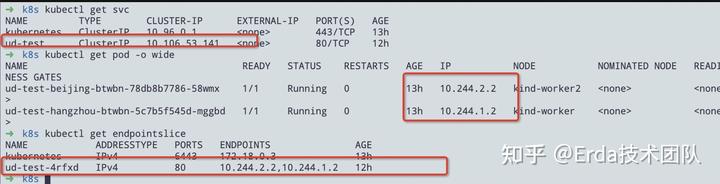

Once everything is created, we see one Service backed by two Pods, together with an automatically generated EndpointSlice.

Next, log in to a node that carries the `topology.kubernetes.io/zone` label and inspect its IPVS rules.

The IPVS rules list only the backend Pod IPs of the endpoints that match the node's own zone label.

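What if a node does not have the `topology.kubernetes.io/zone` label? Then it gets no backends at all, which is not reasonable either. That is why Kubernetes provides the `*` option: if no label matches, the backends fall back to the full set of endpoints. `*` must be the last entry in `topologyKeys`, as in the commented-out line of the Service above.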
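The selection rule described in the last two paragraphs can be sketched in a few lines of Go. This is a simplified, standalone restatement for illustration, not kube-proxy's code; the real implementation is reproduced in the appendix. The `endpoint` type and the `filterByKeys` and `addresses` helpers are hypothetical. The endpoint data is copied from the EndpointSlice example, and the node labels are assumed.

```go
package main

import "fmt"

// Label key and wildcard as used in the Service example above.
const (
	zoneKey = "topology.kubernetes.io/zone"
	anyKey  = "*"
)

// endpoint is a hypothetical stand-in for one EndpointSlice entry:
// an address plus its topology map.
type endpoint struct {
	address  string
	topology map[string]string
}

// filterByKeys walks topologyKeys in order and returns the first non-empty set of
// endpoints whose value for that key equals the node's value. "*" accepts every
// endpoint. If nothing matches and there is no "*", the node gets no backends.
func filterByKeys(nodeLabels map[string]string, keys []string, eps []endpoint) []endpoint {
	if len(keys) == 0 {
		return eps // no topologyKeys: no topology constraints
	}
	for _, key := range keys {
		if key == anyKey {
			return eps
		}
		want, ok := nodeLabels[key]
		if !ok {
			continue // the node has no value for this key, so nothing can match it
		}
		var matched []endpoint
		for _, ep := range eps {
			if v, ok := ep.topology[key]; ok && v == want {
				matched = append(matched, ep)
			}
		}
		if len(matched) > 0 {
			return matched
		}
	}
	return nil
}

// addresses extracts the IPs, i.e. what would end up as IPVS real servers.
func addresses(eps []endpoint) []string {
	out := make([]string, 0, len(eps))
	for _, ep := range eps {
		out = append(out, ep.address)
	}
	return out
}

func main() {
	// Endpoints copied from the EndpointSlice example.
	eps := []endpoint{
		{"10.244.2.2", map[string]string{"kubernetes.io/hostname": "kind-worker2", zoneKey: "beijing"}},
		{"10.244.1.2", map[string]string{"kubernetes.io/hostname": "kind-worker", zoneKey: "hangzhou"}},
	}
	beijingNode := map[string]string{zoneKey: "beijing"} // assumed node labels
	unlabeledNode := map[string]string{}

	fmt.Println(addresses(filterByKeys(beijingNode, []string{zoneKey}, eps)))           // [10.244.2.2]
	fmt.Println(addresses(filterByKeys(unlabeledNode, []string{zoneKey}, eps)))         // []
	fmt.Println(addresses(filterByKeys(unlabeledNode, []string{zoneKey, anyKey}, eps))) // [10.244.2.2 10.244.1.2]
}
```

Keys are tried strictly in order, so putting `*` last keeps same-zone routing whenever it is possible. Traffic goes to every endpoint only when the node's zone cannot be matched.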

Appendix: Service Topology implementation in kube-proxy

```go
package proxy

import (
	v1 "k8s.io/api/core/v1"
)

// Endpoint is kube-proxy's endpoint abstraction, trimmed here to the one
// method FilterTopologyEndpoint needs.
type Endpoint interface {
	GetTopology() map[string]string
}

// FilterTopologyEndpoint returns the appropriate endpoints based on the cluster
// topology.
// This uses the current node's labels, which contain topology information, and
// the required topologyKeys to find appropriate endpoints. If both the endpoint's
// topology and the current node have matching values for topologyKeys[0], the
// endpoint will be chosen. If no endpoints are chosen, topologyKeys[1] will be
// considered, and so on. If either the node or the endpoint do not have values
// for a key, it is considered to not match.
//
// If topologyKeys is specified, but no endpoints are chosen for any key, the
// service has no viable endpoints for clients on this node, and connections
// should fail.
//
// The special key "*" may be used as the last entry in topologyKeys to indicate
// "any endpoint" is acceptable.
//
// If topologyKeys is not specified or empty, no topology constraints will be
// applied and this will return all endpoints.
func FilterTopologyEndpoint(nodeLabels map[string]string, topologyKeys []string, endpoints []Endpoint) []Endpoint {
	// Do not filter endpoints if service has no topology keys.
	if len(topologyKeys) == 0 {
		return endpoints
	}

	filteredEndpoint := []Endpoint{}

	if len(nodeLabels) == 0 {
		if topologyKeys[len(topologyKeys)-1] == v1.TopologyKeyAny {
			// edge case: include all endpoints if topology key "Any" specified
			// when we cannot determine current node's topology.
			return endpoints
		}
		// edge case: do not include any endpoints if topology key "Any" is
		// not specified when we cannot determine current node's topology.
		return filteredEndpoint
	}

	for _, key := range topologyKeys {
		if key == v1.TopologyKeyAny {
			return endpoints
		}
		topologyValue, found := nodeLabels[key]
		if !found {
			continue
		}

		for _, ep := range endpoints {
			topology := ep.GetTopology()
			if value, found := topology[key]; found && value == topologyValue {
				filteredEndpoint = append(filteredEndpoint, ep)
			}
		}

		if len(filteredEndpoint) > 0 {
			return filteredEndpoint
		}
	}
	return filteredEndpoint
}
```