Flink Local Mode Deployment

Flink's local mode runs the whole cluster on a single machine, which makes it convenient for quick testing.

Requirements:

- Java 1.8.x or higher

- ssh
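You can check both requirements before installing. The following is a minimal sketch. `java_major` and `check_prereqs` are hypothetical helper names. The parsing assumes the usual `version "1.8.0_231"` line that `java -version` prints.

```shell
#!/bin/sh
# Print the major Java version from a version string:
# "1.8.0_231" (pre-Java 9 scheme) -> 8, "11.0.5" -> 11, "17" -> 17.
java_major() {
  case "$1" in
    1.*) echo "$1" | cut -d. -f2 ;;
    *)   echo "$1" | cut -d. -f1 ;;
  esac
}

# Check the two requirements listed above on the current machine.
check_prereqs() {
  command -v java >/dev/null 2>&1 || { echo "java not found" >&2; return 1; }
  # `java -version` writes e.g. 'java version "1.8.0_231"' to stderr
  ver=$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  [ -n "$ver" ] || { echo "cannot parse java -version output" >&2; return 1; }
  if [ "$(java_major "$ver")" -lt 8 ]; then
    echo "Java $ver is too old, 1.8 or higher is required" >&2
    return 1
  fi
  command -v ssh >/dev/null 2>&1 || { echo "ssh not found" >&2; return 1; }
  echo "OK: Java $ver, ssh available"
}

# Usage: check_prereqs
```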

1. Download

https://flink.apache.org/downloads.html
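You can also script the download. The following is a minimal sketch. It assumes that old releases such as 1.9.1 remain available under the archive.apache.org path built below, and that curl is installed. `flink_url` and `download_flink` are hypothetical helper names.

```shell
#!/bin/sh
# Build the download URL of a Flink binary release.
# Assumption: old releases are kept under archive.apache.org with this layout.
flink_url() {
  version="$1"   # e.g. 1.9.1
  scala="$2"     # Scala build, e.g. 2.11
  echo "https://archive.apache.org/dist/flink/flink-${version}/flink-${version}-bin-scala_${scala}.tgz"
}

# Download the tarball into a directory (needs curl and network access).
# -f: fail on HTTP errors, -L: follow redirects.
download_flink() {
  url=$(flink_url "$1" "$2")
  curl -fL -o "$3/$(basename "$url")" "$url"
}

# Usage: download_flink 1.9.1 2.11 /home
# then continue with the tar command from step 2.
```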

2. Extract

[root@hadoop01 local]# tar -zxvf /home/flink-1.9.1-bin-scala_2.11.tgz -C /usr/local/

[root@hadoop01 local]# cd ./flink-1.9.1/

3. Configure environment variables in /etc/profile

export FLINK_HOME=/usr/local/flink-1.9.1/

export PATH=$PATH:$JAVA_HOME/bin:$ZK_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$KAFKA_HOME/bin:$FLINK_HOME/bin
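You can make the profile edit repeatable with a helper that appends the lines only once. The following is a minimal sketch. `add_flink_env` is a hypothetical name. It adds only FLINK_HOME and `$FLINK_HOME/bin` on the PATH, and it assumes the other variables on the PATH line above (JAVA_HOME, ZK_HOME, HADOOP_HOME, KAFKA_HOME) are configured elsewhere.

```shell
#!/bin/sh
# Append the Flink variables to a profile file only if FLINK_HOME is not
# exported there yet, so re-running the setup does not duplicate lines.
add_flink_env() {
  profile="$1"      # e.g. /etc/profile
  flink_home="$2"   # e.g. /usr/local/flink-1.9.1
  if ! grep -q '^export FLINK_HOME=' "$profile" 2>/dev/null; then
    echo "export FLINK_HOME=${flink_home}" >> "$profile"
    # single quotes: write $PATH and $FLINK_HOME literally, expand at login
    echo 'export PATH=$PATH:$FLINK_HOME/bin' >> "$profile"
  fi
}

# Usage (as root): add_flink_env /etc/profile /usr/local/flink-1.9.1
```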

4. Reload the environment variables

[root@hadoop01 flink-1.9.1]# source /etc/profile

[root@hadoop01 flink-1.9.1]# which flink

5. Start the cluster

./bin/start-cluster.sh

6. Test:

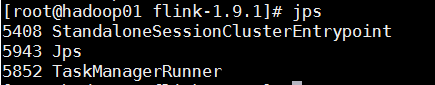

Check the running Java processes:

jps

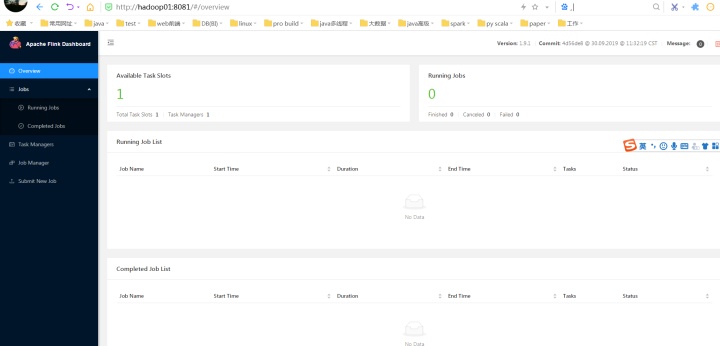
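You can automate the jps check. The following is a minimal sketch. It assumes the process names that a Flink 1.9 standalone cluster normally shows in jps: StandaloneSessionClusterEntrypoint for the JobManager and TaskManagerRunner for the TaskManager. `flink_is_running` is a hypothetical helper.

```shell
#!/bin/sh
# Read `jps` output ("<pid> <MainClass>" per line) on stdin; succeed only
# if both daemons of the standalone cluster are present.
flink_is_running() {
  awk '$2 == "StandaloneSessionClusterEntrypoint" { jm = 1 }  # JobManager
       $2 == "TaskManagerRunner"                  { tm = 1 }  # TaskManager
       END { exit !(jm && tm) }'
}

# Usage: jps | flink_is_running && echo "Flink local cluster is up"
```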

Open the web UI at http://hadoop01:8081
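Flink's REST API serves the web UI on the same port, so you can also fetch the cluster overview from the command line. The following is a minimal sketch. It assumes the `/overview` endpoint and its `taskmanagers` and `slots-total` fields, so verify them against the REST API reference for your Flink version. `overview_field` is a hypothetical helper that extracts a numeric field from the JSON with sed instead of requiring jq.

```shell
#!/bin/sh
# Extract a numeric field from the JSON returned by GET /overview.
# Crude on purpose: assumes a flat JSON object with plain integer values.
overview_field() {
  sed -n "s/.*\"$1\": *\([0-9][0-9]*\).*/\1/p"
}

# Usage (cluster from step 5 running):
#   curl -s http://hadoop01:8081/overview | overview_field taskmanagers
#   curl -s http://hadoop01:8081/overview | overview_field slots-total
```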

Start a streaming job:

Open a listening socket on port 9000 with netcat, then type the input lines:

[root@hadoop01 flink-1.9.1]# nc -l 9000

lorem ipsum

ipsum ipsum ipsum

bye

Submit the job:

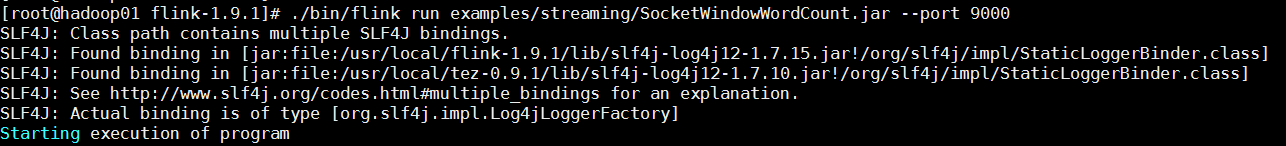

[root@hadoop01 flink-1.9.1]# ./bin/flink run examples/streaming/SocketWindowWordCount.jar --port 9000
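To know what the streaming job should print, you can compute the same word count locally with standard text tools. The following sketch is for cross-checking only, and `count_words` is a hypothetical helper. SocketWindowWordCount counts words per processing-time window, so its output matches these totals only when all input lines arrive within one window, as in the result shown below.

```shell
#!/bin/sh
# Split stdin on whitespace, count each distinct word, and print
# "word : count" lines (sorted by word) in the same shape as the job output.
count_words() {
  tr -s '[:space:]' '\n' | grep -v '^$' | sort | uniq -c |
    awk '{ print $2 " : " $1 }'
}

# Usage: printf 'lorem ipsum\nipsum ipsum ipsum\nbye\n' | count_words
```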

Watch the results:

[root@hadoop01 ~]# tail -f /usr/local/flink-1.9.1/log/flink-*-taskexecutor-*.out

lorem : 1

bye : 1

ipsum : 4

Start a batch job:

[root@hadoop01 flink-1.9.1]# flink run ./examples/batch/WordCount.jar --input /home/words --output /home/1907/out/00

Starting execution of program

Program execution finished

Job with JobID 8b258e1432dde89060c4acbac85f57d4 has finished.

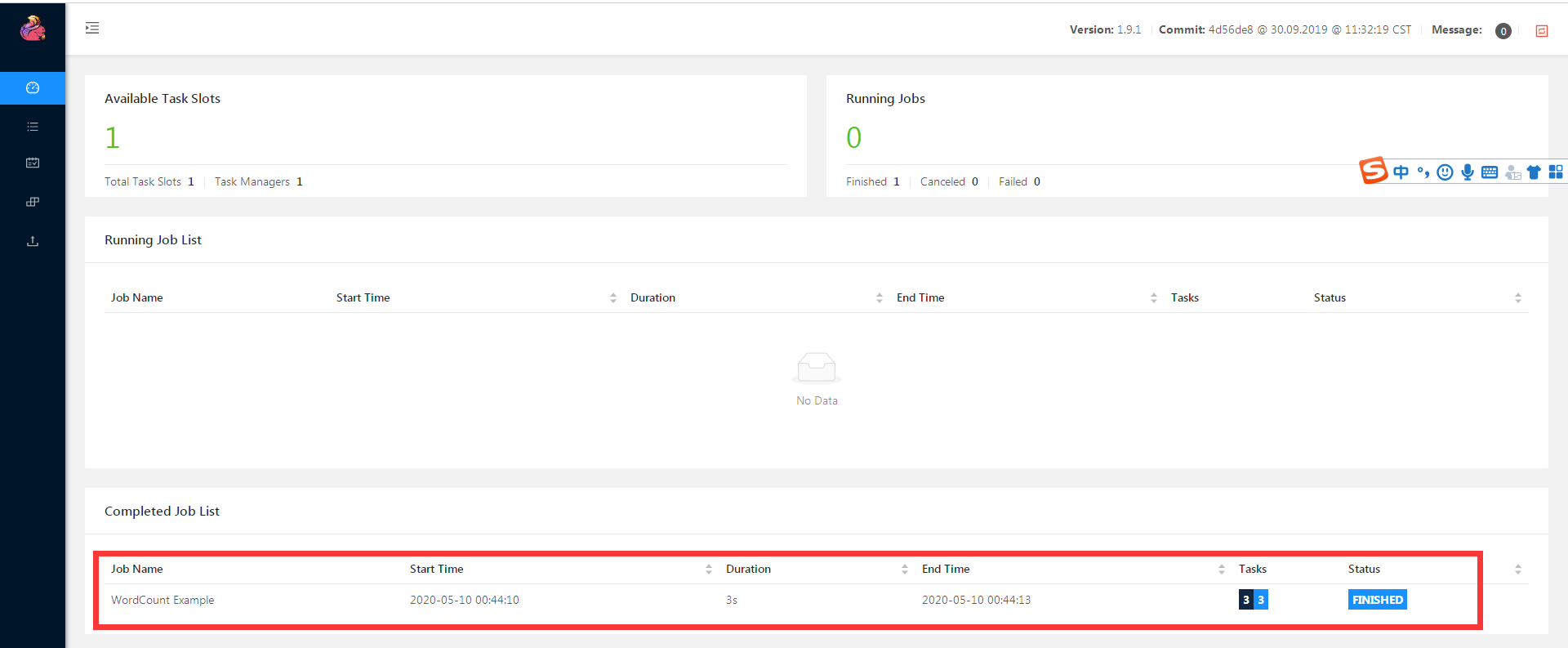

Job Runtime: 3528 msweb控制台如下图:
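You can inspect the batch result directly from the output path. The following is a minimal sketch. It assumes WordCount writes one `word count` pair per line, separated by a space. It also assumes the output is a single file at parallelism 1 and a directory of part files otherwise. `top_words` is a hypothetical helper.

```shell
#!/bin/sh
# Print the N most frequent words (default 10) from WordCount output.
# Sort by count descending, then by word for a stable order.
top_words() {
  out="$1"
  n="${2:-10}"
  if [ -d "$out" ]; then
    cat "$out"/*      # parallelism > 1: one file per parallel sink
  else
    cat "$out"        # parallelism 1: a single file
  fi | sort -k2,2nr -k1,1 | head -n "$n"
}

# Usage: top_words /home/1907/out/00 5
```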

7. Stop the local cluster

./bin/stop-cluster.sh
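To confirm that the shutdown succeeded, you can reverse the jps check. The following is a minimal sketch. It assumes the process names that a Flink 1.9 standalone cluster shows in jps (StandaloneSessionClusterEntrypoint and TaskManagerRunner). `leftover_flink_pids` is a hypothetical helper, and empty output means no Flink daemon is left.

```shell
#!/bin/sh
# Read `jps` output on stdin and print the PIDs of any Flink daemons
# that are still running.
leftover_flink_pids() {
  awk '$2 == "StandaloneSessionClusterEntrypoint" || $2 == "TaskManagerRunner" { print $1 }'
}

# Usage: ./bin/stop-cluster.sh && sleep 2 && jps | leftover_flink_pids
```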